References

Machine Theory of Mind

- Neil C. Rabinowitz et al. (2018)

- https://arxiv.org/abs/1802.07740

Theory of Mind May Have Spontaneously Emerged in Large Language Models

- Michal Kosinski (2023)

- https://arxiv.org/abs/2302.02083

Theory of Mind for Multi-Agent Collaboration via Large Language Models

- Huao Li, Yu Quan Chong, Simon Stepputtis et al. (2024)

- https://arxiv.org/abs/2310.10701

Theory of Mind in Large Language Models: Examining Performance of 11 State-of-the-Art models vs. Children Aged 7–10 on Advanced Tests

- Max van Duijn, Bram van Dijk, Tom Kouwenhoven et al. (2023)

- https://arxiv.org/abs/2310.20320

LLM Theory of Mind and Alignment: Opportunities and Risks

- Winnie Street (2024)

- https://arxiv.org/abs/2405.08154

LLMs achieve adult human performance on higher-order theory of mind tasks

- Winnie Street, John Oliver Siy, Geoff Keeling et al. (2024)

- https://arxiv.org/abs/2405.18870

Can Polite Prompts Lead to Higher-Quality LLM Responses? – AI Theory of Mind Perspective

- Shuyuan Zhao & Qingfei Ming (PACIS 2025)

- https://aisel.aisnet.org/pacis2025/hci/hci/16

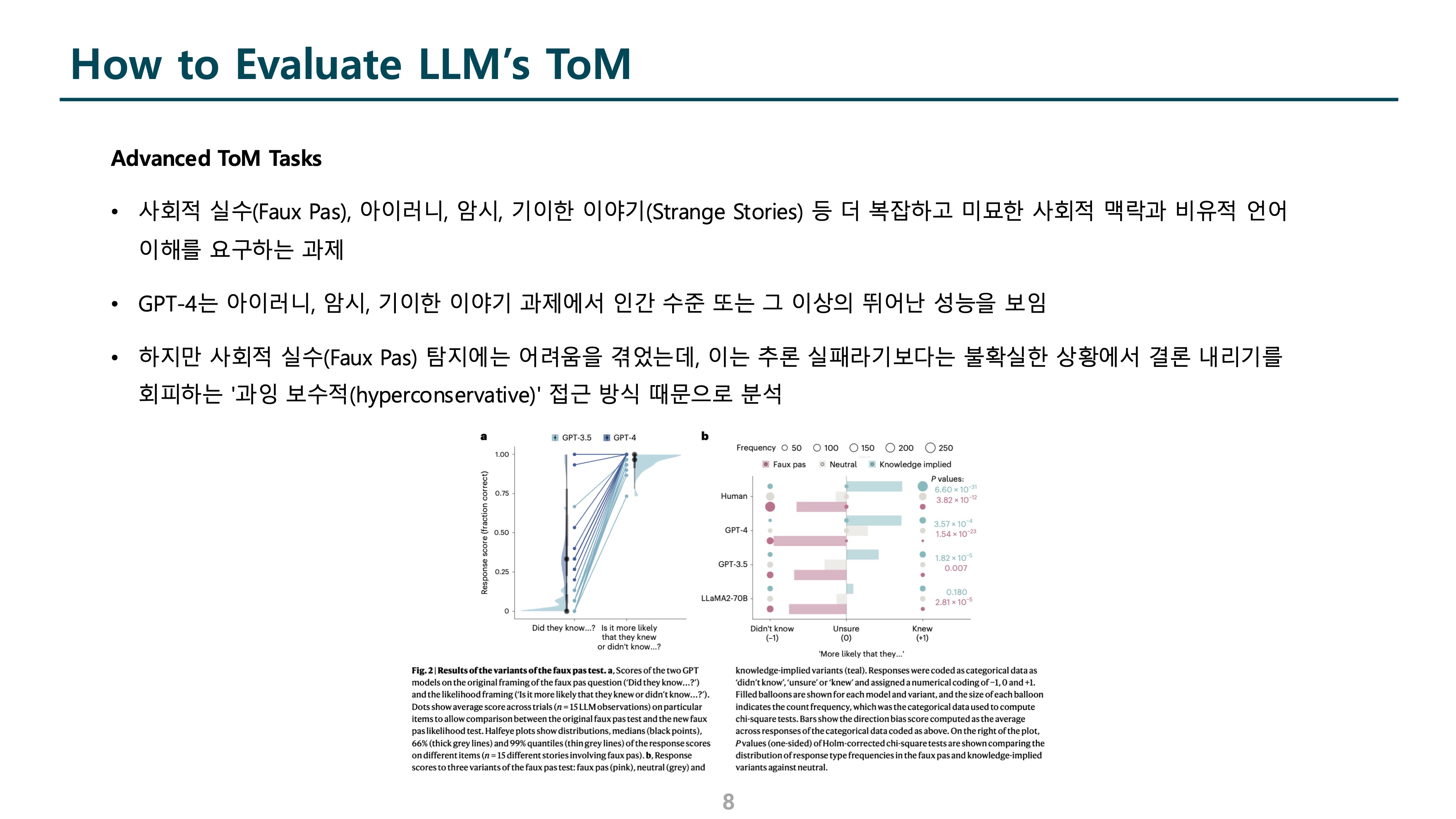

Testing theory of mind in large language models and humans

- James W. A. Strachan, Dalila Albergo, Giulia Borghini et al. (2024)

- https://www.nature.com/articles/s41562-024-01882-z
